Large language models (LLMs) are the breakthrough technology of our time, and companies are racing to integrate these computational marvels into their products. deepset's AI Platform (formerly known as deepset Cloud) drastically reduces the overhead associated with building a production-ready application powered by LLMs, freeing teams to focus on the product itself.

In this blog post, we’ll take you on a tour of our platform’s core principles to help you decide whether your team would benefit from a cloud-based platform for building with LLMs.

The promise of large language models

The amazing triumph of LLMs following the release of ChatGPT in November 2022 comes down to two main factors: their ability to understand and reproduce language and knowledge, and their incredible versatility. LLMs can be used to generate content, chat with customers, and perform other complex tasks previously reserved for humans alone, such as generating SQL queries and reviewing code.

We’re nowhere near the limits of what these models can accomplish. This makes it all the more exciting to be at the forefront of building with LLMs. But it also brings with it some unique challenges.

Your project, carried by the cloud

When it comes to AI-powered products, your team of data scientists, backend engineers, and product designers should focus on the quality and usefulness of the application they’re building. However, that’s only possible if the heavy lifting – such as scaling compute resources and managing multiple pipelines – is taken care of.

Enter deepset. Our AI platform lets you design, deploy, monitor, and evaluate your AI application in one clean and straightforward UI (you can watch the demo video here). It also manages compute resources seamlessly in the background, while functioning as a unified environment for cross-functional teams to create the best product possible: powered by LLMs, and by your company’s data.

deepset's core design principles

Let's take a closer look at the key pillars of our platform to see exactly how it helps organizations – from legal and financial institutions to online news media – build LLM-powered products in a fast, clean, and easy-to-use way.

Hedge your bets in the high-paced LLM space

LLMs use real-world textual training data as a basis to learn human-like reasoning. New LLMs are appearing all the time: smaller models that require less processing power, customizable open-source LLMs, or models that can use huge context windows as the basis for their output.

In such a fast-moving field, it is essential to stay on top of new developments – and equally important not to get locked into any one model or model vendor. That is why deepset is built with composability in mind: our proven pipeline paradigm allows teams to build systems with the flexibility to run different models independently. This modular approach also means that you can easily adapt your system to changing user needs without having to redesign it from scratch.
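To make the idea of composability concrete, here is a minimal sketch in plain Python. It is not deepset's or Haystack's actual API: the `Generator` interface, `EchoModel`, `QAPipeline`, and `keyword_retriever` are hypothetical names invented for illustration. The point is only that the pipeline depends on a small interface rather than on a specific model, so the model can be swapped without touching the rest of the system.

```python
from dataclasses import dataclass
from typing import Callable, Protocol


class Generator(Protocol):
    """Hypothetical interface: anything that turns a prompt into text."""

    def generate(self, prompt: str) -> str: ...


@dataclass
class EchoModel:
    """Stand-in for a hosted LLM. A real implementation would call a model API."""

    name: str

    def generate(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


def keyword_retriever(question: str) -> list[str]:
    """Toy retriever: returns documents that contain a longer word from the question."""
    docs = [
        "Haystack is an open-source framework.",
        "Paris is the capital of France.",
    ]
    terms = {w.strip("?.,!").lower() for w in question.split() if len(w) > 3}
    return [d for d in docs if any(t in d.lower() for t in terms)]


@dataclass
class QAPipeline:
    """Retrieval followed by generation; both components are replaceable."""

    retrieve: Callable[[str], list[str]]
    generator: Generator

    def run(self, question: str) -> str:
        context = " ".join(self.retrieve(question))
        prompt = f"Context: {context}\nQuestion: {question}"
        return self.generator.generate(prompt)


pipeline = QAPipeline(retrieve=keyword_retriever, generator=EchoModel("model-a"))
answer_a = pipeline.run("What is Haystack?")

# Swapping the model is a one-line change; the retriever and prompt stay the same.
pipeline.generator = EchoModel("model-b")
answer_b = pipeline.run("What is Haystack?")
```

Because only the `generator` attribute changes between the two runs, the same questions can be replayed against both models and the answers compared directly.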

Build with the user experience in mind

Your product is only useful if it solves your users' pain points. That's why we encourage developers to think about user needs from day one, and why we built the deepset AI Platform with easy prototyping and feedback in mind: within the UI, you can set up multiple versions of your system and test them directly with real users. Quantitative metrics let you compare different setups side by side at a glance.

Note that there’s no need to build anything yourself: every prototype comes with a user interface and a link, as well as highly intuitive feedback features. Your users will love it!

Accept only the highest level of security

When it comes to sensitive proprietary data, you cannot be too careful. That's why deepset meets the highest security standards and has passed a SOC 2 Type II audit. As a result, our customers sleep well at night knowing that their data is stored securely and in compliance with the latest security protocols.

For customers who are concerned about sending their proprietary data to companies like OpenAI – within the LLM prompt, or even to fine-tune a model – deepset can connect to open-source models hosted on Amazon SageMaker.

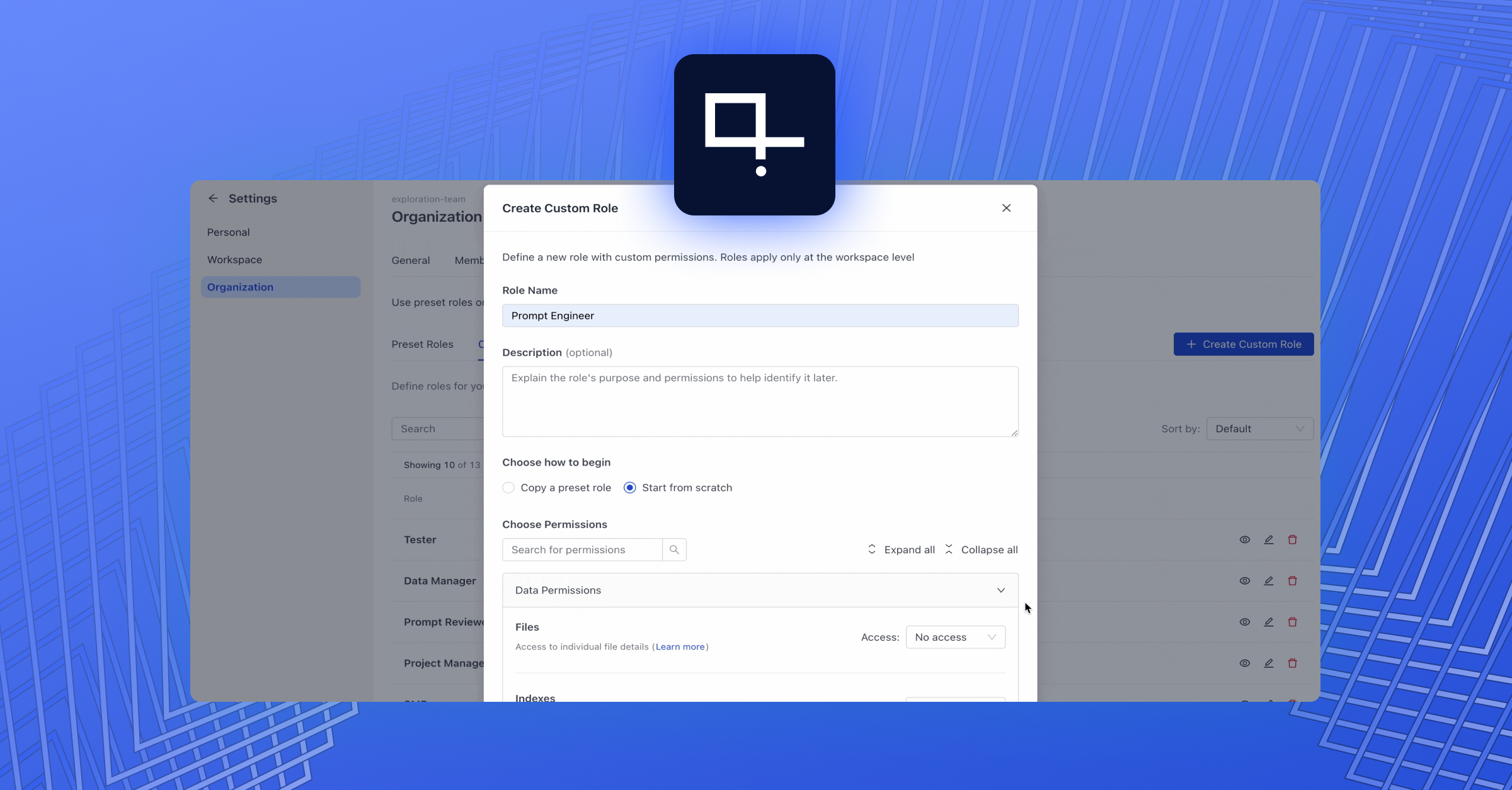

Align your stakeholders for LLM adoption

In traditional data science workflows, there can be a lack of transparency. Teams may spend months or even years on designing the perfect system, without ever testing it with real users or showing it to stakeholders.

However, experience shows that a practice of rapid iterations is much more likely to turn your project into a success story. That is why deepset allows you to instantly demo your preliminary product to all types of stakeholders, regardless of their technical background.

Follow best LLMOps practices

As impressive as LLMs may be, within a production-ready application they are just one element of a large and intricate software system with lots of moving pieces. So when building with LLMs, it’s important to stick to the best practices long established by software developers:

- Elasticity: LLM-powered pipelines deployed with deepset scale with your user base. The cloud-native architecture seamlessly adjusts to increased usage and saves you money during low-traffic times.

- Managing data at scale: deepset takes care of the entire data lifecycle: from the ingestion of gigabytes of text to data processing and vectorization, to storage in a vector database. This can be a one-time event as well as a continuous process.

- Evaluation: deepset places a huge emphasis on transparency. Find the best possible configuration using the built-in quantitative and qualitative evaluation tools.

- Monitoring: deepset offers extensive observability features. It lets you monitor your pipelines’ output in real time, allowing you to keep an eye on your system’s behavior and take action when needed.

- Agility: We respond to new challenges quickly. For instance, we were among the first to develop a successful mechanism for combatting LLM hallucinations – which we promptly integrated into deepset.
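The data lifecycle described under "Managing data at scale" (ingestion, processing, vectorization, storage in a vector database) can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not how deepset implements it: `split_into_chunks`, `embed`, `InMemoryVectorStore`, and `ingest` are hypothetical names, the "embedding" is a simple word count rather than a learned model, and the store is an in-memory list rather than a real vector database.

```python
import math
from collections import Counter


def split_into_chunks(text: str, max_words: int = 8) -> list[str]:
    """Processing step: split a document into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy vectorization: word counts. A real system would use an embedding model."""
    return Counter(w.strip(".,!?").lower() for w in text.split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Stand-in for a vector database: stores (chunk, vector) pairs in a list."""

    def __init__(self) -> None:
        self.rows: list[tuple[str, Counter]] = []

    def add(self, chunk: str) -> None:
        self.rows.append((chunk, embed(chunk)))

    def search(self, query: str, top_k: int = 1) -> list[str]:
        query_vector = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(query_vector, row[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:top_k]]


def ingest(documents: list[str], store: InMemoryVectorStore) -> None:
    """Ingestion: chunk each document, then vectorize and store every chunk."""
    for document in documents:
        for chunk in split_into_chunks(document):
            store.add(chunk)


store = InMemoryVectorStore()
ingest(
    ["Invoices are archived for ten years.", "Support tickets close after two weeks."],
    store,
)
best_match = store.search("How long are invoices archived?")[0]
```

Calling `ingest` again with new documents appends to the same store, which mirrors the distinction between a one-time load and a continuous process.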

Why choose deepset?

deepset has been empowering enterprises to apply NLP and LLMs for over five years. Generative AI, which has evolved at lightning speed over the past few months, has long been on our radar – along with other groundbreaking technologies like information extraction and semantic search.

Haystack, our open-source framework for building standalone applications with LLMs, is a comprehensive toolbox beloved by AI experts and newcomers alike. deepset leverages Haystack technology, and has inherited its composable and flexible philosophy.

Through its fully fledged, cloud-based development and inference platform for enterprise AI teams, deepset adds ease of use, quick prototyping-feedback cycles, and a powerful, scalable backend architecture.
