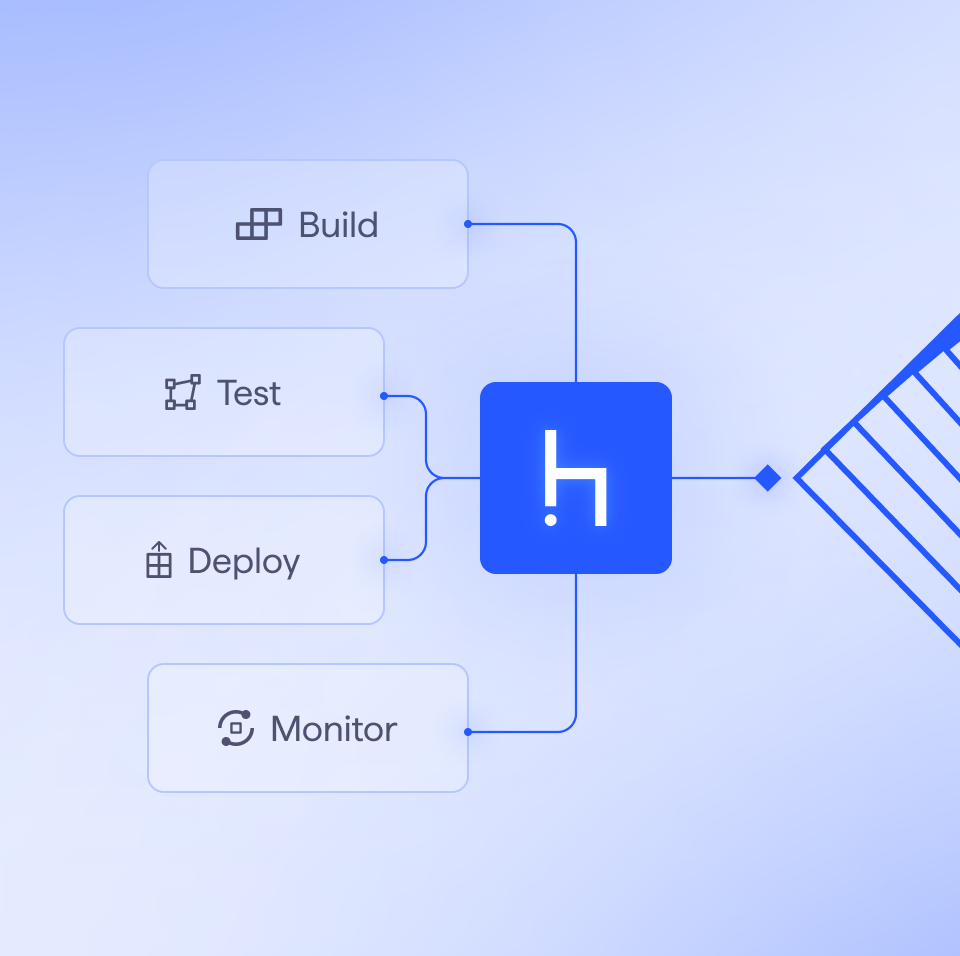

The Haystack Enterprise Platform, built on the Haystack open source framework, is a unified solution for developing and deploying custom, enterprise-grade AI agents and applications across any deployment type (cloud, hybrid, on-prem). It supports modular AI architectures including agents, RAG, intelligent document processing (IDP), semantic search, text-to-SQL, and more. Organizations use the Haystack Enterprise Platform to accelerate development, lower costs, control governance, and access expert enterprise support, so they can innovate faster and stay ahead in the rapidly evolving AI landscape.

The Haystack Enterprise Platform was formerly known as the deepset AI platform.
