
30 November 2022 was the day that generative AI went viral. OpenAI's GPT models had been around, and discussed among NLP practitioners, for some time, but they were just one Transformer-based paradigm among many. That November, however, OpenAI made a model from its GPT-3.5 series accessible to a mass audience by turning it into an application: ChatGPT.

If you want to move beyond models and build products that people use and love, it helps to understand what that means in practice. In this blog, we'll discuss the four main factors that distinguish a viral application like ChatGPT from a model like GPT-3, and how you can apply these insights to help your organization successfully adopt and benefit from generative AI.

1. UI/UX

UI/UX design is perhaps the most underestimated difference between a model and an application. Without a UI, a model is just raw capability that requires code to access. A good UI makes those capabilities accessible and guides users toward meaningful ways of using them.

Going back to the ChatGPT example: by wrapping their LLMs in a browser application operated through a simple chat box, OpenAI's developers presented the LLM's capabilities in a way that was easy to use and understand, turning the bare technology into a viable product. The same is true of Perplexity, Claude, and other browser-based AI products.

So far, we've talked about models. But what users of AI-powered products interact with today is much more than a model: it's a complex Compound AI system made up of many components, such as data storage, document search and information retrieval for working with ad hoc data, ML models of varying complexity and size, and interfaces to other software.

Users don't need to know the details of such an implementation; what they care about is usability and usefulness. The UI serves as the entry point through which users interact with the underlying Compound AI system as intuitively as possible. Even if you change that system substantially, by adding individual components or entire subsystems, the UI can stay the same.
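To make the idea of a stable entry point concrete, here is a minimal Python sketch. Everything in it is hypothetical: `CompoundSystem`, `handle_user_message`, and the stand-in `retrieve` and `generate` components are illustrative names, not deepset or Haystack APIs, and a real system would call an actual retriever and LLM. The point is that the UI only ever calls `handle_user_message`, so the system behind it can grow without the UI changing.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical component type: takes the shared state dict and returns it, updated.
Component = Callable[[Dict], Dict]


@dataclass
class CompoundSystem:
    """Runs its components in order over a shared state dict."""
    components: List[Component] = field(default_factory=list)

    def run(self, query: str) -> str:
        state: Dict = {"query": query}
        for component in self.components:
            state = component(state)
        return state.get("answer", "")


def handle_user_message(system: CompoundSystem, message: str) -> str:
    """The single call the UI makes; it stays the same however the system changes."""
    return system.run(message)


def retrieve(state: Dict) -> Dict:
    """Stand-in retriever: keyword lookup in a tiny in-memory document store."""
    documents = {"refund": "Refund policy document."}
    query = state["query"].lower()
    state["context"] = [text for key, text in documents.items() if key in query]
    return state


def generate(state: Dict) -> Dict:
    """Stand-in generator: a real system would prompt an LLM with the context."""
    context = " ".join(state.get("context", [])) or "none"
    state["answer"] = f"answer using: {context}"
    return state


if __name__ == "__main__":
    v1 = CompoundSystem([generate])            # model only
    v2 = CompoundSystem([retrieve, generate])  # retrieval added later
    for system in (v1, v2):
        print(handle_user_message(system, "How do refunds work?"))
```

Replacing `CompoundSystem([generate])` with `CompoundSystem([retrieve, generate])` changes what happens behind the scenes, but the call the UI makes is identical.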

2. Monitoring/Evaluation

LLMs are hard enough to evaluate on their own. When you build a system like the one just described, with many interacting components, many of them non-deterministic, monitoring needs to be at the center of your product mindset. Today, this is typically done by collecting as many signals as possible from the system to create traces: records of how a piece of information moved through the Compound AI system and how it was modified along the way.

By logging everything that happens in your system, including traces, you gain maximum insight into your system's behavior. This allows you to:

- Trigger alerts when something goes wrong,

- Inspect the system, including its inputs and outputs, if you observe an undesirable pattern over time,

- Find bottlenecks and other design flaws in your system, and

- Identify potential cost or time savings.

Granular monitoring of your Compound AI systems is essential and generates valuable data for further analysis.
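A minimal sketch of what a trace might look like, assuming a simple in-process setup. `Span`, `Trace`, `traced`, `slowest_component`, and `slow_spans` are hypothetical names chosen for illustration; production systems usually rely on a dedicated tracing library or observability platform (for example, one built on OpenTelemetry) rather than hand-rolled classes. The last two functions hint at how the same data supports bottleneck analysis and alerting.

```python
import time
import uuid
from dataclasses import dataclass, field
from typing import Any, Callable, List


@dataclass
class Span:
    """One step of a trace: which component ran, its input and output, and its duration."""
    component: str
    inputs: Any   # stored by reference; copy it first if components mutate their input
    outputs: Any
    duration_s: float


@dataclass
class Trace:
    """All spans recorded for one request, grouped under a unique ID."""
    trace_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    spans: List[Span] = field(default_factory=list)


def traced(trace: Trace, name: str, fn: Callable[[Any], Any], value: Any) -> Any:
    """Call fn(value), record input, output, and wall-clock duration, and return the result."""
    start = time.perf_counter()
    result = fn(value)
    trace.spans.append(Span(name, value, result, time.perf_counter() - start))
    return result


def slowest_component(trace: Trace) -> str:
    """Bottleneck analysis: name of the component with the longest duration."""
    return max(trace.spans, key=lambda span: span.duration_s).component


def slow_spans(trace: Trace, max_duration_s: float) -> List[str]:
    """Alerting: names of components that exceeded a latency threshold."""
    return [span.component for span in trace.spans if span.duration_s > max_duration_s]


if __name__ == "__main__":
    trace = Trace()
    query = traced(trace, "normalize", str.strip, "  what is RAG?  ")
    # Simulated slow retriever so the bottleneck shows up in the trace.
    docs = traced(trace, "retrieve", lambda q: (time.sleep(0.05), [f"doc about {q}"])[1], query)
    print(trace.trace_id, [(s.component, round(s.duration_s, 3)) for s in trace.spans])
    print("slowest:", slowest_component(trace), "alerts:", slow_spans(trace, 0.01))
```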

3. Model and vendor agnosticism

Perhaps due in part to the enormous hype surrounding LLMs (their size, cost, and training details), many people assume that when they use an application for, say, coding, translation, or image creation, they're interacting directly with a generative model. As explained above, this is often not the case: the UI can hide a complex underlying system with many interacting components.

This means that a Compound AI system is not tied to a single model, or even a single type of model. The system can, and should, be updated regularly so that it keeps performing at its best in terms of capabilities, latency, and cost.
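Model agnosticism can be as simple as treating models as interchangeable entries in a catalog and choosing among them by measured quality, latency, and cost. The sketch below assumes you already have such measurements from your own evaluations; `ModelOption`, `choose_model`, the model names, and all the numbers are made up for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class ModelOption:
    """Hypothetical catalog entry; all values would come from your own measurements."""
    name: str
    quality: float            # e.g. score on your own evaluation set, 0..1
    latency_ms: float         # e.g. measured p95 latency
    cost_per_1k_tokens: float


def choose_model(options: Iterable[ModelOption], min_quality: float,
                 max_latency_ms: float) -> Optional[ModelOption]:
    """Return the cheapest option meeting the quality bar and latency budget, or None."""
    eligible = [o for o in options
                if o.quality >= min_quality and o.latency_ms <= max_latency_ms]
    return min(eligible, key=lambda o: o.cost_per_1k_tokens, default=None)


# Made-up catalog for illustration; re-run the selection whenever a new model is added.
CATALOG = [
    ModelOption("vendor-a-large", quality=0.92, latency_ms=1800, cost_per_1k_tokens=0.030),
    ModelOption("vendor-b-small", quality=0.81, latency_ms=400, cost_per_1k_tokens=0.002),
    ModelOption("self-hosted-mid", quality=0.86, latency_ms=700, cost_per_1k_tokens=0.004),
]

if __name__ == "__main__":
    best = choose_model(CATALOG, min_quality=0.85, max_latency_ms=1000)
    print(best.name if best else "no model meets the requirements")
```

Because the rest of the system depends only on the selected entry, adding a new vendor's model means adding one catalog line and re-running the selection.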

LLMs are expensive, so you should use them only for tasks they are good at. For example, you generally don't want an LLM to do math, because a) it's far more expensive than a regular calculator, and b) it isn't built for that: it's trained to generate tokens probabilistically, not to compute exact results. That's why the Claude application, for example, outsources calculations, along with data analysis and visualization, to a JavaScript-based "analysis tool". The same goes for information extraction, sentiment analysis, and many other tasks that smaller, non-generative models can often handle better.
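The division of labor described above can be sketched in Python. This is not how Claude's analysis tool works internally (that tool runs JavaScript); it's a hypothetical router that evaluates plain arithmetic deterministically with Python's standard `ast` module and hands everything else to an LLM, represented here by a stub function. `calculate` and `route` are illustrative names.

```python
import ast
import operator
from typing import Callable, Union

Number = Union[int, float]

# Whitelisted operators; anything else is rejected. Exponentiation is deliberately
# left out so a query like "9 ** 9 ** 9" can't tie up the calculator.
_BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}
_UNARY_OPS = {ast.USub: operator.neg, ast.UAdd: operator.pos}


def _evaluate(node: ast.AST) -> Number:
    """Recursively evaluate a parsed expression that uses only whitelisted nodes."""
    if isinstance(node, ast.Expression):
        return _evaluate(node.body)
    if isinstance(node, ast.Constant) and type(node.value) in (int, float):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
        return _BINARY_OPS[type(node.op)](_evaluate(node.left), _evaluate(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
        return _UNARY_OPS[type(node.op)](_evaluate(node.operand))
    raise ValueError("not a plain arithmetic expression")


def calculate(expression: str) -> Number:
    """Deterministic arithmetic; raises SyntaxError or ValueError for anything else."""
    return _evaluate(ast.parse(expression, mode="eval"))


def route(query: str, llm: Callable[[str], str]) -> str:
    """Send plain arithmetic to the calculator and everything else to the LLM."""
    try:
        return str(calculate(query))
    except (SyntaxError, ValueError):
        return llm(query)


if __name__ == "__main__":
    fake_llm = lambda q: f"[LLM] {q}"  # stand-in for a real model call
    for q in ["12 * (3 + 4)", "7 / 2", "What is sentiment analysis?"]:
        print(q, "->", route(q, fake_llm))
```

In practice, many systems let the LLM itself decide when to call a calculator through tool or function calling instead of parsing every query up front; the sketch only illustrates handing exact computation to a deterministic component.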

4. Deep AI expertise

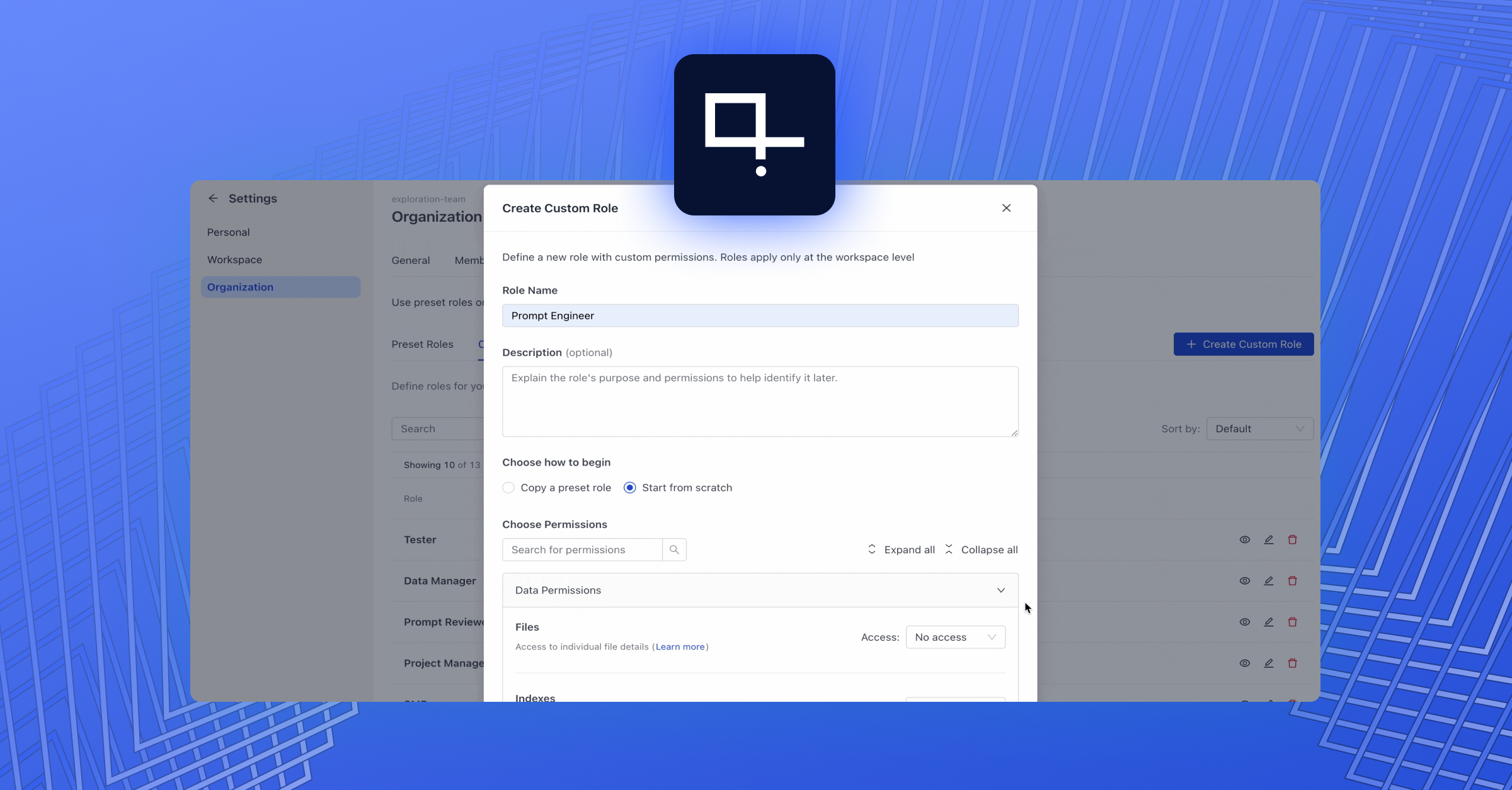

deepset provides AI expertise and technology to organizations in financial services, aerospace, manufacturing, publishing, technology, the public sector, and more. This gives us deep insight into the customization that use cases across a wide variety of customers require. Despite their differences, these use cases share common steps when building Compound AI systems, such as:

- Adapting the system architecture

- Model selection

- Database integration

- Data preprocessing

- Retrieval workflows

- Prompt engineering

- Custom code

- AI guardrails

This is a lot to consider, and most organizations need help getting it right. They should work with AI experts who have built and customized complex systems before and understand best practices for designing, deploying, monitoring, and maintaining them.

At deepset, we have a team of AI engineers who understand not only the technology, its evolution, and its inner workings, but also how best to harness its power in Compound AI systems, from enterprise search to agents, that have a tangible impact on your business. They can make informed recommendations about models and how to get the most out of them, while ensuring regulatory compliance and maintaining user trust throughout the process.

Our take

To build successful AI products, look beyond the model hype. Instead, focus on:

- Intuitive interfaces that guide users,

- Robust monitoring to understand system behavior,

- The right tool for each task, whether that's an LLM or a simpler solution, and

- Outcome-focused AI expertise.

It's not the latest model that matters, but how effectively you turn AI capabilities into user value.
